wrong again

for real this time

Being wrong about something (and wrong for sure, not just some opinion impedance mismatch) is humbling.

I found out I was wrong about something today. I fucked up a migration 6 weeks ago. Some records were missing a crucial property, which meant some customers’ metering information didn’t make it through the billing system.

Impact was low: thousands of dollars across dozens of subscriptions in the July billing cycle. Not a big deal, and management and the seniors have been great about it.

But what a big deal it is to me.

I made a model in my head, I built it out in code, I littered that code with asserts that ensured the model’s properties were upheld. ✅ ✅ ✅

I ran the code and spot checked the results. ✅ ✅

I was sure the job was good.

I was wrong.

Then, on the way home, I’m listening to The Free Press, and I’m stunned when Amanda Knox describes how her prosecutor was wrong about her guilt.

He based his whole prosecution on the faulty conviction that a real burglar would never have broken in through that particular window … wtf? 😳 🤷

I feel better and worse: my wrongness, and a few thousand 💲, is nothing. His wrongness cost this woman years in prison and years more of existential pain.

He was sure he was right. An entire judicial system agreed with him for a time.

But he was wrong.

Why do we do it? What makes us drop our uncertainty and commit so fully to models and stories that turn out to be so clearly false? (Time to re-read Kahneman, I guess.)

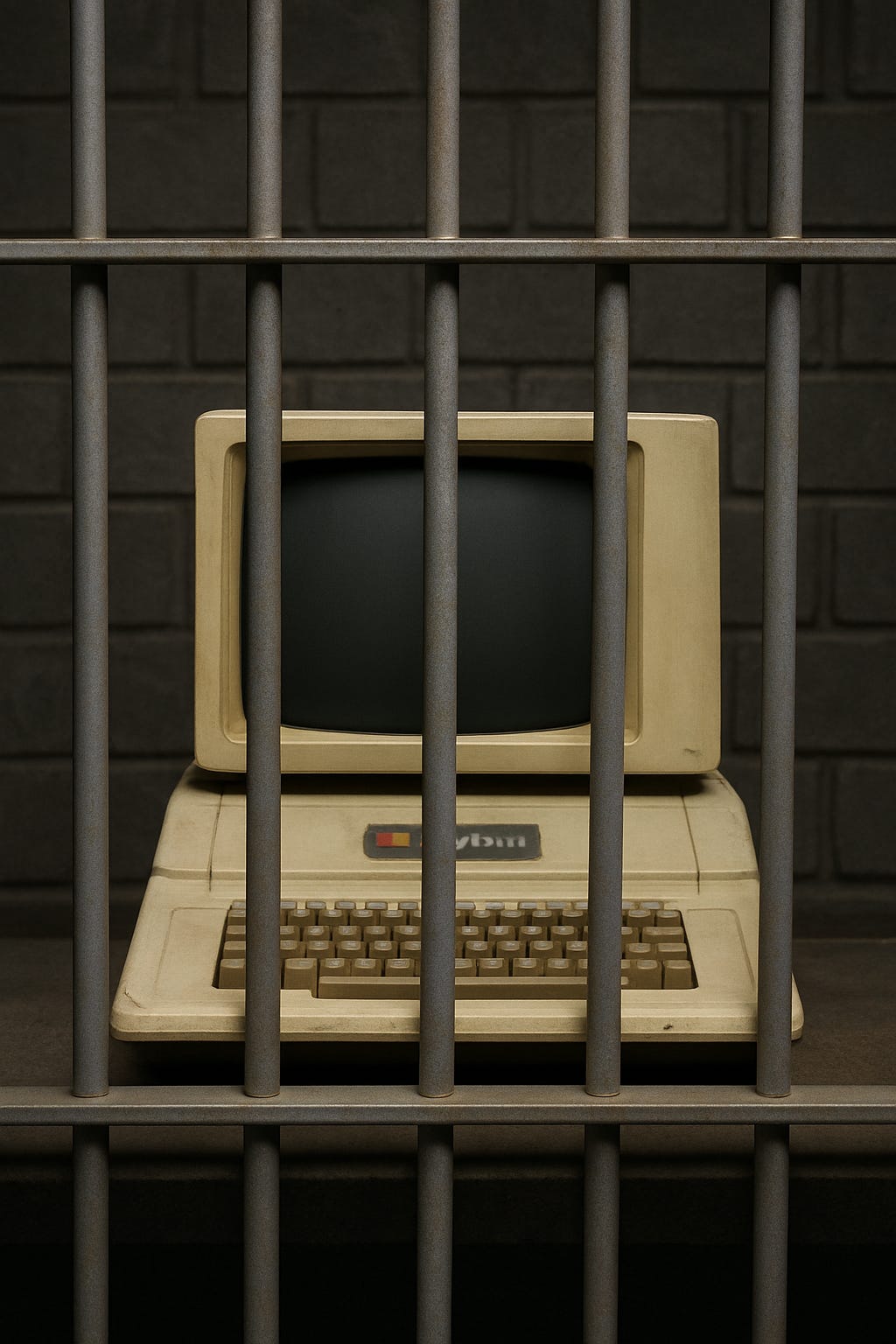

And is it not super weird that LLMs do it too? Incredibly useful; occasionally confidently, perhaps catastrophically, wrong. Just like us.

But here’s the scary thing. LLMs can be wrong just like humans. But only humans can be accountable.

Marc Andreessen is right… LLMs are new computers.